Minimum Wage Effects with Non-Living Wages

I’m teaching “Economics for Non-Economists” this semester. This is an interesting experiment, and is strongly testing my belief that you can teach economics without mathematics so long as people understand graphs and tables. (It appears that people primarily learn how to read graphs and tables in mathematics-related courses. Did everyone except me know this?)

Since economics is All About Trade-offs, our textbook notes that minimum wage increases should also mean some people are not employed. Yet, as I noted to the students, in the past several decades, none of the empirical research in the United States shows this to be true. (From Card and Krueger (1994) to Card and Krueger (2000) to the City of Seattle, in fact, all of the evidence has run the other way, as noted by the Forbes link.)

Part of that is intuitive. If you’re running a viable business and able to generate $50 an hour, it hardly makes sense not to hire someone for $7.25, or even $9.25, to free up an hour of your time. The tradeoff is that your workers make more and your customers can afford to pay or buy more. Ask Henry Ford how that worked for him.
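The hiring logic above reduces to a subtraction. A minimal sketch, assuming (hypothetically) that each hour of hired work frees exactly one hour of the owner's time and that the owner can put that hour to work at the $50 rate; the function name is illustrative only:

```python
# Hypothetical model: one hired hour frees one hour of the owner's time.
OWNER_VALUE_PER_HOUR = 50.00  # the $50/hour figure from the text


def net_gain_per_hour(wage: float, owner_value: float = OWNER_VALUE_PER_HOUR) -> float:
    """Value of the owner's freed hour minus the wage paid to free it."""
    return owner_value - wage


for wage in (7.25, 9.25):
    print(f"wage ${wage:.2f}: net gain ${net_gain_per_hour(wage):.2f} per hour")
```

Under those assumptions the hire pays off at any wage below the owner's $50 hourly value; the sketch ignores payroll taxes, training time, and supervision.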

The generic counterargument (notably not an argument well-grounded in economic theory) was summarized accurately by Tim Worstall in one of his early attempts to hype the later-superseded initial UW study for the Seattle Minimum Wage Study Team.

[T]here is some level of the minimum wage where the unemployment effects become a greater cost than the benefits of the higher wages going to those who remain in work.

This seems intuitive in the short term and problematic in the long term, even ignoring the sketchiness of the details and the curious assumption of an overall increase in unemployment (or at least underemployment) if you assume a rising Aggregate Demand environment. To confirm the assumptions would seem to require either a rather more open economy than exists anywhere or a rather severe privileging of capital over labor.*

On slightly more solid ground is the assumption that minimum wage should be approximately half of the median hourly wage. But then you hit issues such as median weekly real earnings not having increased much in almost forty years, while a minimum wage at half the median nominal wage rate suggests that the Federal minimum wage should be somewhere between about $12.75 and $14.25 an hour. (Links are to FRED graphics and data; per-hour derivations are based on the 35-hour work week standard for “full-time.”)
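The $12.75 to $14.25 range can be checked by running the half-of-median rule backwards. The sketch below assumes, as the text does, a 35-hour full-time week; rather than quoting any FRED value, it derives the median weekly earnings that each end of the range implies. Function names are illustrative:

```python
HOURS_PER_WEEK = 35  # the "full-time" standard used in the text


def implied_min_wage(median_weekly: float, hours: float = HOURS_PER_WEEK) -> float:
    """Half of the median hourly wage, computed from median weekly earnings."""
    return 0.5 * median_weekly / hours


def implied_median_weekly(min_wage: float, hours: float = HOURS_PER_WEEK) -> float:
    """Inverse: median weekly earnings at which min_wage is half the median."""
    return 2 * min_wage * hours


for target in (12.75, 14.25):
    weekly = implied_median_weekly(target)
    print(f"${target:.2f}/hr <- median weekly earnings of ${weekly:,.2f}")
```

So the range corresponds to median weekly earnings of roughly $892.50 to $997.50 under the 35-hour convention.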

So all of the benchmark data indicates that reasonable minimum wage increases will have virtually no effect, and none on established, well-managed businesses. The question becomes: why would that be so?

One baseline assumption of economic models is that working full-time provides at least the necessary income to cover basic expenses. Employment and Income models assume it, and it’s either fundamental to Arrow-Debreu or you have to assume that people either (a) are not rational, (b) die horrible deaths, or (c) both.

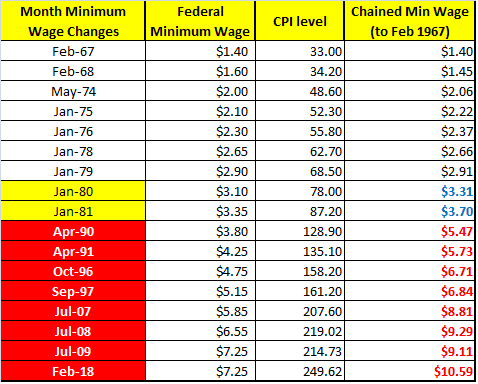

If you test that assumption, it has not obviously been so for at least 30 years:

The last two increases of the Carter Administration slightly lag inflation, but they came during a period of high inflation as well; the four-year plan may just have underestimated the effect of G. William Miller. (Its authors would hardly be unique in this.)

By the next Federal increase, though—more than nine years of inflation, major deficit spending, a shift to noticeably negative net exports, and a couple of bubble-licious rounds of asset growth (1987, 1989) later—the minimum wage was long past the possibility of paying a living wage, so any relative increase in it would, by definition, increase Aggregate Demand as people came closer to being able to subsist.

The gap is greater than $1.50 an hour by the end of the 1991 increase. The 1996-1997 increase barely manages to slow the acceleration of the gap (to nearly $1.70), and after ten years without an increase it takes three 70-cent increases just to get the gap back down to $1.86 by their end in 2009.

Nine years later, almost another $1.50 has been eroded, even in an inflation-controlled environment.

Card and Krueger, in the context of an increasing gap between “making minimum wage” and “making subsistence wage,” appear to have discovered not so much that minimum wage increases do no harm to well-run businesses as that any negative impact of an increase, when the minimum wage does not provide subsistence income, will be more than offset by the increase in Aggregate Demand at the lower end.

My non-economist students had very little trouble understanding that.

*The general retort of “well, then, why not $100/hour” would create a severe discontinuity, making standard models ineffective in the short term and requiring recalibration to estimate the longer term. Claiming that such a statement reflects “economic reality,” then, would empirically be a statement of ignorance.

Interesting:

“One baseline assumption of economic models is that working full-time provides at least the necessary income to cover basic expenses. Employment and Income models assume it, and it’s either fundamental to Arrow-Debreu or you have to assume that people either (a) are not rational, (b) die horrible deaths, or (c) both.”

Define “basic expenses.” Ricardo and Marx both assumed – insisted in fact – that basic meant enough, and only enough, for a subsistence lifestyle which allowed the labour force to reproduce itself over time.

Your definition of basic seems to mean the lifestyle you think people ought to be able to have in a rich nation. Whichever of those is the right target to be aiming for, it’s obvious that they are different targets.

“If you test that assumption, it has not obviously been so for at least 30 years”

Well, that’s slightly difficult. We only started collecting the statistic of how many people actually earn the minimum wage in, I think, 1979, when it was some 13% of the labour force. Today it’s what, 3%? 4%?

Which gives us two things. Firstly, the legal minimum wage is obviously not the only determinant of wages at the bottom of the labour market. Secondly, even by the standards you’re using about “basic” it’s not true that the minimum wage is the only method of gaining it for people, is it?

Hello Tim, long time and “no see.”

“Define “basic expenses.” Ricardo and Marx both assumed – insisted in fact – that basic meant enough, and only enough, for a subsistence lifestyle which allowed the labour force to reproduce itself over time.”

You do not appear to be a young man, as are many of those Ken may be instructing. I am sure you have seen quite a few people laboring in the cities and the countryside. What was your observation? Do you believe they are living a life of subsistence, getting by, and able to replace themselves? Your figure comes from the BLS. It would be nice if you identified it as such; but, thank goodness for Google, heh? But to my point, subsistence living is pretty much a prevailing life style for those at minimum wage and has been there longer than 30 years. You could see it and just not quantify it. What was the percentage of those living in poverty during the Eisenhower administration?

2.7% of the Labor Force is making minimum wage. Does subsistence mean living in poverty? Brookings’ Hamilton Project, using CPS data, would tell you that 25% of the 47.6 million living in poverty are in the Labor Force, either working or looking for work: “A quarter of those who live in poverty are in the labor force—that is, working or seeking employment.” Would you consider 100% FPL, 138% FPL, or 200% FPL to be subsistence? You are right that the minimum wage is not the only determinant of subsistence. The larger number does demonstrate that the minimum wage moves many out of poverty as defined today.

Do you agree? If not, why not?

“Basic” could be people’s (very possibly correct) intuitive assessment of what the economy they are in (US, India) should be able to pay them for a day of really demanding work (including fast food work). Their assessment roughly corresponds, I believe, with the max labor price that a truly free market (fully unionized) could squeeze out of consumers.

In 1996 in Chicago, I had “mom’s” UBI: free rent and utilities forever in a spacious apartment down the block from a spacious park (spacious view), free internet, free use of my brother’s Lincoln Town Car …

… but I was down to $10/hr (today’s money) driving a taxi in Chicago.

Between 1981 and early 1996 the City of Chicago allowed one thirty cent per mile raise in the taxi meter …

… and around the 1990 midpoint of that span the city began building subways to both airports, opened up unlimited limo plates, put on free trolleys between all the hot spots downtown (now gone), and added 40% more taxis!

By early 1996 I was living in a residence hotel in San Francisco (20 12th Street) where the electricity in your room went off four or five times a day (somebody overloading the circuit breaker; you put your single outlet on the biggest battery you could afford) and the elevator was out once a week (the people just fine, if less colorful than in the Million Dollar Hotel movie) …

… but I was making $20/hr (since 2004 when I retired, SF has doubled taxis). I’d figure out the rest later.

See the way those people, those (poor) immigrants, hustle at McDonald’s. Americans won’t scramble like that for $10/hr, maybe not for $15/hr.

Actually half will work no matter what — ask Prof. Martin Sanchez-Jankowski at Berkeley.

100,000 Chicago gang-age males are in street gangs. Fifty percent ain’t one-percenters, but the American-born won’t scramble over a stove for next-to-nothing. UBI won’t get most to normal employment (just won’t) and won’t clean up Chicago’s south side and west side. $20/hr will.

https://www.cbsnews.com/news/gang-wars-at-the-root-of-chicagos-high-murder-rate/


Point being: whatever price collective bargaining can squeeze out of the ultimate consumers of production should pretty closely fit the intuitive definition of “basic income” it takes to get Americans to go to work. Or didn’t you know, gentle reader, that poverty in Chicago, anyway, is a result of too-low pay causing (legal, non-gang) unemployment, not too-high pay? :-O

BTW, the min wage, like the EITC (which transfers 1/2 of 1% of income when 40% of American labor earns below what we think the min should be), helps whom it helps but is not the “big answer.”

Let’s imagine (a thought experiment) that wage increases caused prices at McDonald’s, Walgreen’s and Walmart to go up 10% — possibly causing 10% loss of sales until the next payday that is.

At 33%, 12% and 7% labor costs respectively, that would mean wages had jumped (approximately) 33%, 80% and 150% respectively.
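The thought experiment rests on one relationship: if the whole wage increase is passed through to prices and nothing else changes, the price rise equals the labor share of costs times the wage rise. So the wage rise a given price rise can fund is the price rise divided by the labor share. A sketch under that full-pass-through assumption (non-labor costs and margins held fixed, which is an assumption, not something the comment states); the function name is illustrative:

```python
def wage_rise_funded(price_rise: float, labor_share: float) -> float:
    """Wage increase (as a fraction) that a given price increase can fund,
    assuming full pass-through and unchanged non-labor costs and margins."""
    return price_rise / labor_share


PRICE_RISE = 0.10  # the 10% price rise in the thought experiment
labor_shares = {"McDonald's": 0.33, "Walgreen's": 0.12, "Walmart": 0.07}

for firm, share in labor_shares.items():
    print(f"{firm}: wages could rise about {wage_rise_funded(PRICE_RISE, share):.0%}")
```

This gives roughly 30%, 83% and 143%, in the same neighborhood as the approximate figures above.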

I figure that if the min wage has not reached the level wages would reach in a truly free labor market (that is, with collective bargaining), then the trade-off between job loss and higher pay is all in favor of higher pay.

That is assuming there is any job loss (at the low wage level). But if low wage earners spend their new wage gains proportionately more at lower wage businesses then likely sales at lower wage business will climb. Sales may actually decline at some higher wage businesses where the new wage gains used to be spent. Everybody may be looking in the wrong place for job losses.

The Minimum Wage Hike Is Good for Business

Bill Phelps Aug 3, 2016

https://www.forbes.com/sites/groupthink/2016/08/03/the-minimum-wage-hike-is-good-for-business/#2ce0ee386307

Seattle min wage study showed loss of 16,000 jobs out of 40,000 below $13/hr. Horrors?! Loss of only 6,000 out of 92,000 below $19/hr. First group ($13/hr) included in second. Bracket creep? 44,000 added to 292,000 above $19/hr. Bracket leap?!

http://angrybearblog.strategydemo.com/2017/06/seattle-minimum-wage.html
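The “bracket creep” reading follows from the below-$19 group containing the below-$13 group, so the $13 to $19 band's change is the difference of the two. A sketch of that subtraction using only the figures quoted in the comment, treating each count as a change against that band's starting level (an assumption about how the figures were reported):

```python
# Figures as quoted in the comment (jobs); negative = jobs lost.
change_below_13 = -16_000
base_below_13 = 40_000
change_below_19 = -6_000   # includes the below-$13 group
base_below_19 = 92_000
change_above_19 = 44_000
base_above_19 = 292_000

# Jobs paying $13-$19 = (below $19) minus (below $13), for both change and base.
change_13_to_19 = change_below_19 - change_below_13
base_13_to_19 = base_below_19 - base_below_13


def pct(change: int, base: int) -> float:
    """Percentage change relative to the base count."""
    return 100 * change / base


print(f"below $13: {pct(change_below_13, base_below_13):+.1f}%")
print(f"$13-$19:   {change_13_to_19:+,} jobs ({pct(change_13_to_19, base_13_to_19):+.1f}%)")
print(f"above $19: {pct(change_above_19, base_above_19):+.1f}%")
```

On these numbers the $13 to $19 band gains about 10,000 jobs, which is what the comment's “bracket creep” question is pointing at.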

… 2.6% unemployment rate

https://www.google.com/search?q=seattle+unemployment+rate&ie=utf-8&oe=utf-8

… $80,000 median household income (up $9,374 since 2014 — US HH median $56,000)

http://www.seattletimes.com/seattle-news/data/80000-median-wage-income-gain-in-seattle-far-outpaces-other-cities/

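… more construction cranes working than any other US city:

| City | Cranes |
|---|---:|
| Seattle | 58 |
| Los Angeles | 36 |
| Denver | 35 |
| Chicago | 34 |
| Portland | 32 |
| San Francisco | 22 |
| Washington, DC | 20 |
| New York | 18 |
| Honolulu | 10 |
| Austin | 9 |
| Boston | 7 |
| Phoenix | 5 |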

Illustrated map: http://ritholtz.com/2017/07/where-the-cranes-are/?utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+TheBigPicture+%28The+Big+Picture%29

From the Ms. Foundation book Raise the Floor, p. 44, table 2-3:

Annual Minimum Needs Budget Without Employment Health Benefits (1999-dollar table adjusted to 2018 dollars at http://cpiinflationcalculator.com/, keeping in mind that health care costs have skyrocketed since 1999)

| Item | Amount ($) |
|---|---:|
| Housing | 11,505 |
| Health Care | 9,486 |
| Food | 6,916 |
| Child Care | 6,817 |
| School-Age Care | 0 |
| Transportation | 3,058 |
| Clothing and Personal Expenses | 1,699 |
| Household Expenses | 727 |
| Telephone | 818 |
| Subtotal Before Taxes | 41,028 |
| Payroll Tax | 3,139 |
| Federal Tax (including credits) | 1,716 |
| State Tax (including credits) | 615 |
| Total | 46,501 |

I forgot to specify above: two adults, one child
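A quick check of the budget arithmetic for this two-adult, one-child household, and of the hourly wage it implies. The line items as listed sum to $41,026 against the stated $41,028 subtotal, a two-dollar gap plausibly due to rounding each item separately when converting from 1999 dollars. The hourly figures assume 2,080 paid hours a year (40 hours × 52 weeks), which is an assumption not in the table:

```python
budget = {
    "Housing": 11_505,
    "Health Care": 9_486,
    "Food": 6_916,
    "Child Care": 6_817,
    "School-Age Care": 0,
    "Transportation": 3_058,
    "Clothing and Personal Expenses": 1_699,
    "Household Expenses": 727,
    "Telephone": 818,
}
taxes = {
    "Payroll Tax": 3_139,
    "Federal Tax (including credits)": 1_716,
    "State Tax (including credits)": 615,
}

STATED_TOTAL = 46_501      # total as printed in the table
HOURS_PER_YEAR = 40 * 52   # assumption: full-time, full-year work

items_sum = sum(budget.values())
taxes_sum = sum(taxes.values())
print(f"line items: ${items_sum:,}   taxes: ${taxes_sum:,}")

# Hourly wage each earner needs to reach the stated total.
for earners in (1, 2):
    hourly = STATED_TOTAL / (earners * HOURS_PER_YEAR)
    print(f"{earners} earner(s): ${hourly:.2f}/hr each")
```

Under that assumption, one earner would need about $22.36 an hour, or two earners about $11.18 each.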

“subsistence living is pretty much a prevailing life style for those at minimum wage and has been there longer than 30 years.”

That’s not subsistence. Subsistence is, in fact, $1.90 a day, the World Bank definition of absolute poverty. And yes, that’s per person, at modern American prices.

US minimum wage is nowhere near subsistence.

US minimum wage puts you in the top 10% of global incomes (full year, full time, about $14,000 a year). That’s, obviously, after we’ve adjusted for price differences across countries: that’s PPP.
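The comparison can be put in annual terms. A sketch assuming the federal $7.25 rate and full-year work at either 35 or 40 hours a week (the “about $14,000” sits between the two), set against $1.90 a day per person over a 365-day year, and taking the comment's premise that the $1.90 line applies at US prices:

```python
MIN_WAGE = 7.25            # federal minimum, $/hour
WEEKS = 52                 # full year
POVERTY_LINE_DAILY = 1.90  # World Bank line quoted above, per person per day

poverty_line_annual = POVERTY_LINE_DAILY * 365

for hours in (35, 40):
    annual = MIN_WAGE * hours * WEEKS
    ratio = annual / poverty_line_annual
    print(f"{hours} h/week: ${annual:,.0f}/year, {ratio:.1f}x the $1.90/day line")
```

That works out to $13,195 or $15,080 a year, roughly 19 to 22 times the $693.50 annual equivalent of the line for one person.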

I agree this isn’t a lot, sure, I’d like it to be a higher income – although not a higher minimum wage – but it’s simply not a subsistence wage.

“Does subsistence mean living in poverty?”

Subsistence means staying alive. Just. Yes, of course it damn well means in poverty.

Tim:

Where in the US, which is where we live, could you subsist on $1.90 a day, continuously and without assistance? You can agree it is not a lot, and you also could not do it. $14,000 is not quite 100% FPL. We live in a free market nation which demands more than $1.90/day to subsist without obtaining assistance.

Tim,

What’s the point of dueling definitions about what is “objectively” a subsistence wage in the US or elsewhere? The only question we should concern ourselves with should be: Are 2018’s American lower wage employees getting paid as much as the (consumer) market would be willing to pay them if they have — really, if they had — the ability to withhold their labor to strike a fair bargain, right? Yes? No?

The only way I know to turn 2018’s American labor market’s “price takers” into “price negotiators” is to organize today’s price takers into collective bargaining labor unions. Got any better ideas?

No need to guess what today’s lower paid labor is worth; let the market truly decide. You shouldn’t even want to argue with that, right?

Pretty safe though to guess that today’s minimum wage has fallen crazily below what a truly free market would bear:

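(2013 dollars [that’s thirteen], except the nominal column)

| Year | Per capita income | Real min wage | Nominal min wage | Double-indexed min wage | Real as % of double-indexed |
|---|---:|---:|---:|---:|---:|
| 1968 | 15,473 | 10.74 | (1.60) | 10.74 | 100% |
| 1969–73 | | | (no increase) | | |
| 1974 | 18,284 | 9.43 | (2.00) | 12.61 | |
| 1975 | 18,313 | 9.08 | (2.10) | 12.61 | |
| 1976 | 18,945 | 9.40 | (2.30) | 13.04 | 72% |
| 1977 | | | (no increase) | | |
| 1978 | 20,422 | 9.45 | (2.65) | 14.11 | |
| 1979 | 20,696 | 9.29 | (2.90) | 14.32 | |
| 1980 | 20,236 | 8.75 | (3.10) | 14.00 | |
| 1981 | 20,112 | 8.57 | (3.35) | 13.89 | 62% |
| 1982–89 | | | (no increase) | | |
| 1990 | 24,000 | 6.76 | (3.80) | 16.56 | |
| 1991 | 23,540 | 7.26 | (4.25) | 16.24 | 44% |
| 1992–95 | | | (no increase) | | |
| 1996 | 25,887 | 7.04 | (4.75) | 17.85 | |
| 1997 | 26,884 | 7.46 | (5.15) | 19.02 | 39% |
| 1998–2006 | | | (no increase) | | |
| 2007 | 29,075 | 6.56 | (5.85) | 20.09 | |
| 2008 | 28,166 | 7.07 | (6.55) | 19.45 | |
| 2009 | 27,819 | 7.86 | (7.25) | 19.42 | 40% |
| 2010–18 | | | (no increase) | | |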
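The last column looks like the real minimum wage expressed as a share of the double-indexed one. A sketch that recomputes it from the two wage columns, with row values copied from the table. The table does not say what the double index is indexed to, so its values are simply taken as given:

```python
# (year, real min wage in 2013 $, double-indexed wage in 2013 $, stated %)
rows = [
    (1968, 10.74, 10.74, 100),
    (1976, 9.40, 13.04, 72),
    (1981, 8.57, 13.89, 62),
    (1991, 7.26, 16.24, 44),
    (1997, 7.46, 19.02, 39),
    (2009, 7.86, 19.42, 40),
]

for year, real, dbl_index, stated in rows:
    computed = 100 * real / dbl_index  # real wage as a share of the double index
    print(f"{year}: computed {computed:5.1f}%  stated {stated}%")
```

Each recomputed share falls within a percentage point of the stated figure.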

Just to hammer it all home (undeniably?): the 2007 US minimum wage under-performed Malthus. While the US population ballooned 50%, the min wage dropped almost 50%, instead of the 33% it would have fallen under pre-industrial conditions!!! Nobody can realistically deny crazy, right?

Denis:

I would say anyone could agree a subsistence wage of $1.90 in the US is not subsistence and is a poke in the eye. Tim knows this as well as any other person who has labored or has seen what has taken place. That is really not the argument.

You are at the point today where much manual labor has moved outside of the country to labor-intensive countries. We are left with capital-intensive business. The same is happening with service jobs. Labor-intensive and well-paying service jobs such as accounting, invoicing, call centers, etc. are moving offshore to places like India. PGL’s post on Baumol’s disease (which Tim also wrote about) is pretty much on the money. The cycle is repeating even as the actual amount and cost of Direct Labor within manufacturing or service have lessened over the years.

Denis your numbers are great. Don’t get me wrong.

Clarification of last statement:

EARLY 2007 minimum wage, when it was STILL $5.15, was almost 50% less than the 1968 minimum: $5.79 v. $10.74 (2013 dollars), with per capita income nearly 100% higher, “post-industrial revolution!”

http://www.cpiinflationcalculator.com?y1=2007&y2=2013&i=5.15
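The Malthus jab turns on one step. Under a strict Malthusian benchmark (total output fixed, which is one reading of “pre-industrialization”), a 50% rise in population cuts income per head to 1/1.5 of its old level, a fall of one third. A sketch comparing that benchmark with the fall in the real minimum wage from 1968 ($10.74) to early 2007 ($5.79), both in 2013 dollars per the table's note; function names are illustrative:

```python
def malthusian_drop(population_growth: float) -> float:
    """Fall in per-head income if total output stays fixed (hypothetical benchmark)."""
    return 1 - 1 / (1 + population_growth)


def real_drop(then: float, now: float) -> float:
    """Proportional fall from `then` to `now`."""
    return 1 - now / then


print(f"Malthusian benchmark (+50% population): {malthusian_drop(0.50):.1%}")
print(f"Real minimum wage, 1968 to early 2007:  {real_drop(10.74, 5.79):.1%}")
```

The benchmark gives a 33.3% fall against an actual 46.1% fall in the real minimum wage.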

Sure:

“The only way I know to turn 2018’s American labor market’s “price takers” into “price negotiators” is to organize today’s price takers into collective bargaining labor unions. Got any better ideas?”

Full employment. Works wonders – that’s a part of economics even Karl Marx managed to get right. It’s also why Walmart, Target, etc. raised their own starting wages by $1 an hour and all that a year or two back. They couldn’t get the labour they wanted at the lower price. So, they had to raise the price on offer.

Markets really do work, ya know?

“We live in a free market nation which demands more than $1.90/day to subsist without obtaining assistance.”

Then people should gain assistance, shouldn’t they?

And yes, I do, often, make exactly this argument. Market wages are market wages. We might not like what they are and we can even desire to change the outcome. Excellent, now all we’re arguing about is how do we change that outcome?

Instead of screwing with the market with minimum wages we should be making tax and benefit transfers. After all, it is we, collectively, who insist that people whose labour isn’t worth very much should be getting more than their labour is worth. Thus it should be us, collectively, who are paying for their higher incomes.

That really does mean everyone gets taxed to provide those higher incomes through assistance. The answer, instead of the minimum wage, is Section 8, EITC, SNAP and all the rest. Sure, I argue about details of such assistance, I think it would be much better as a straight cash sum. Even a universal basic income. But the idea of redistribution up to some minimum standard I’m just fine with, support even.

One great advantage of the assistance route is that everyone can see how much it is costing. Which means that people can see and feel the cost of their commitment to those higher incomes for other people. A good test of whether they actually support those higher incomes – and what level of such they do support. If they’re willing to pay for an income of level $x for everyone well, great, good for them and let’s do it.

A very basic part of my world view is that markets work. Even when they don’t (say, climate change) the answer is to include our problem in markets (say, a carbon tax to include the externality in market prices).

Not-markets don’t work.

So, let the market rip in all its red in tooth etc glory. We can then, if we so desire, tax and redistribute to alter those outcomes we don’t like.

Essentially this is the social democratic argument – tax the market outcome. Instead of the socialist argument, alter the market itself. It’s also what the Nordics – Sweden, Denmark etc – do. They’re rather more free market than the US or UK by the usual rankings (Heritage, Fraser etc) and they’re also higher tax and more redistributive. Do note they have *less* progressive tax systems than the US even if larger government and more redistribution.

They also work, work very well. They’re not my desired society – the list of market outcomes which need correction through tax and redistribution I regard as rather shorter than most people do. The Hong Kong end of the spectrum suits my prejudices rather better.

But there’s no doubt that the Nordics do in fact work. Have a very free market economy then tax it. Not try to plan the market itself.

“Market wages are market wages.” Let’s start by agreeing about that.

Do you differentiate between take-it-or-leave-it labor wages — and — collectively bargained labor wages? If market wages are market wages it shouldn’t make any difference to you. Employers sure think there will be a very different wage price outcome, depending, or they wouldn’t illegally put up such a fierce fight against any attempt to collectively bargain at most workplaces.

Karl Marx is supposed to have opined that America doesn’t need socialism because it has labor unions (saith one commenter at Economist’s View anyway).

All I want is a market driven labor price — because labor cannot charge too much or the consumer will walk away. The bottom 80% of the US labor force I would guess is not getting the max the consumer would pay.

EITC at present is shifting 1/2 of 1 percent of income around while 40% of our labor force is earning less than what liberals feel the minimum wage should be ($15/hr). If McDonald’s can pay $15/hr at 33% labor costs, Target and Walgreen’s should be able to pay $20/hr at 10-15% labor costs and (blessedly efficient) Walmart can pay $25/hr.

“Walmart and Target” raised the wage a dollar an hour; I think the consumer will pay more than that.

Let’s imagine (a thought experiment) that wage increases caused consumer prices at McDonald’s, Walgreen’s and Walmart to rise 10%, possibly causing a 10% loss of sales (until the next payday, that is, when we find out where the newly flush employees spend their money). At 33%, 12% and 7% labor costs respectively, that would mean wages had jumped 33%, 80% and 150% respectively at their firms.

Liberals for the most part (American liberals, that is) don’t seem to have caught on to the essential need to make employees price negotiators. This includes Warren and Bernie. Denmark, however, not only negotiates fast food labor prices but also negotiates them on a centralized (sector-wide) basis.

There isn’t even any law mandating sector-wide bargaining in Denmark. Like most of Europe, Denmark doesn’t need laws — collective bargaining is either in its culture (don’t know details too much) or labor union density is such that nobody would think to extort employees out of the ability to collectively bargain. Something that slipped away from us here.

Laws on this side of the water prohibit extortion (firing organizers and joiners) but have near zero enforcement teeth. The US badly needs a legal structure to enable (the normal in any other modern economy) collective bargaining.

* * * * * *

Rebuilding Labor Law from a Clean Slate — April 18th, 2018 – Benjamin Sachs

“In the weeks ahead, we’ll be sharing more about a project that Sharon [Block] and I are launching at Harvard Law School, “Rebalancing Economic and Political Power: A Clean Slate for the Future of Labor Law.” With support from the Ford Foundation and joined by a wide array of participants, our goal is to develop a policy agenda that rebuilds American labor law from a clean slate.”

https://onlabor.org/rebuilding-labor-law-from-a-clean-slate/

* * * * * *

I am now in the process of spamming the perfect-fit idea below (nothing radical about elections) across the country to newspapers in the 100,000-circulation zone in big states, 50,000 in small states, 25,000 X 3 = 75,000 (ND), and to any person or organization who might be interested. Last week I did journalists in MN, WI, MI, OH, IN, IL.

Why Not Hold Union Representation Elections on a Regular Schedule?

Andrew Strom — November 1st, 2017

https://onlabor.org/why-not-hold-union-representation-elections-on-a-regular-schedule/

I suggest on a one, three or five year schedule; plurality rules in each workplace.

* * * * * *

To raise most of our workforce to “price negotiators,” our coming blue-wave Congress need simply mandate union certification and re-certification elections at every private workplace, every one, three or five years, with plurality rules on the latter. And let the truly free at last market rip.

Denis:

Something for you to read. https://www.inturn.co/taxonomy/term/60

“Let’s imagine (a thought experiment) that wage increases caused consumer prices at McDonald’s, Walgreen’s and Walmart to rise 10%”

That doesn’t really work, sorry, it doesn’t. The major consumers of the output of low wage workers are low wage workers. Walmart’s target market isn’t exactly the Porsche driving classes now, is it?

Thus consumer price increases driven by low end wage rises fall disproportionately upon those getting those low end wages. It’s entirely possible to argue a bit – quite a lot actually – about the exact percentages and proportions but the general idea is well established in the evidence.

A rise in low end consumer prices driven by low end wage rises doesn’t make low end workers all that much, if at all, better off.

Tim:

Really? Direct Labor is the driver of costs and prices at WalMart? There are other much larger factors coming into play and making up a greater cost than direct labor.

John:

I have worked in Asia over the last twenty-something years. Does Shenzhen have Social Security, Workers’ Comp, Child Labor laws, EPA Regs, OSHA, Medicare, Medicaid, etc.? They do not, and the most they do have in the plants I have been in is a doctor or nurse on staff, two sure meals a day, a snack at night if you work overtime, and transportation back and forth to work. Your libertarian freedom should not impinge upon the freedom of others. We paid for you too.

It costs me $3600 to ship a 48 footer to China and $4300 to ship a 48 footer out of China.

USPS honors whatever one pays in a foreign country to ship to the US regardless of what it costs here. What costs me pennies to mail in Beijing costs more here. So what. The South is well known for stiffing its people. Arkansas did expansion of Medicaid and many southern states did not.

Your approach is precisely why it becomes necessary for the public sector to intercede in wages, insurance, etc. The work houses are gone . . .